By 2025, artificial intelligence isn’t just changing how we work; it’s rewriting the rules of competition. Regulators around the world are scrambling to catch up as a handful of tech giants control the data, the models, and the cloud computing power needed to build the next generation of AI. This isn’t about monopolies in the old sense. It’s about AI systems that grow stronger the more they’re used, the more data they swallow, and the more infrastructure they lock down. And that’s raising serious antitrust questions.

Why AI Is Different from Past Tech Booms

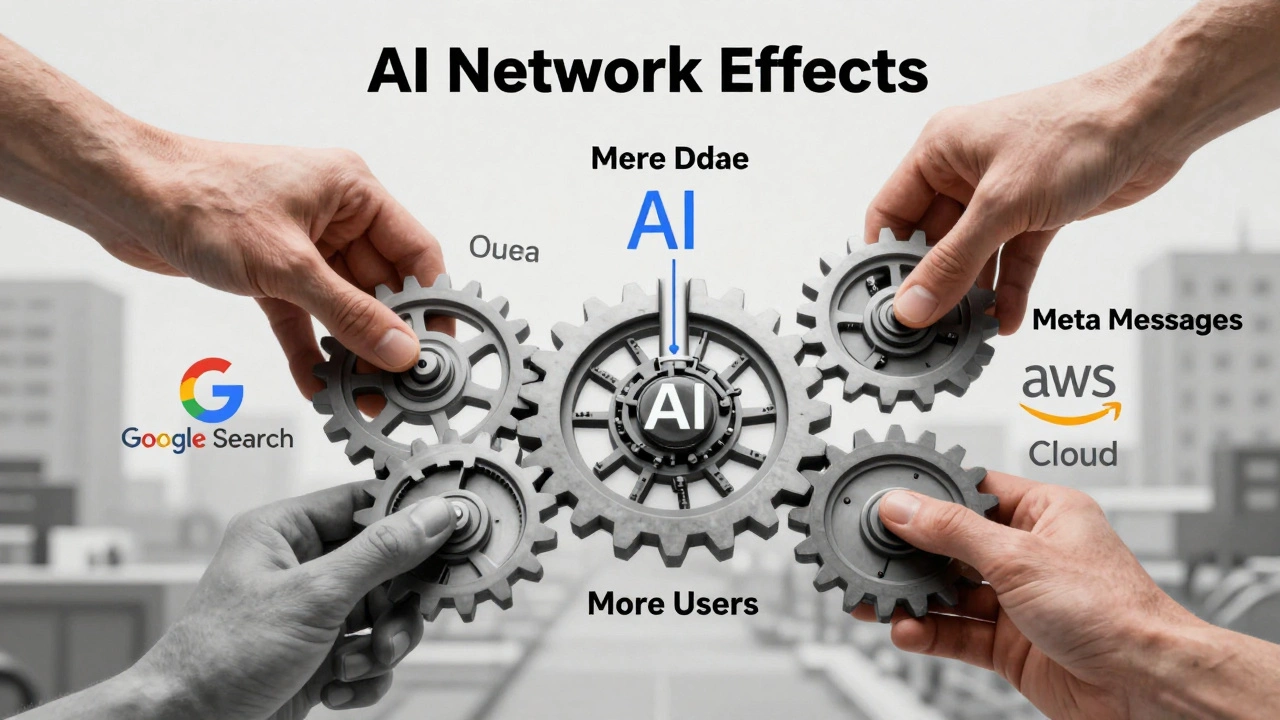

Think back to the early days of search engines or social media. Even if one company dominated, others could still build alternatives using open standards or public data. Today, that’s nearly impossible. Training a large AI model like GPT-4 Turbo or Gemini Ultra requires billions of data points, thousands of high-end GPUs, and access to cloud platforms that only a few companies own. The result? A feedback loop: more data → better models → more users → more data. Competitors can’t catch up, even with the same code.

Take Google. It handles about 92% of global search and owns YouTube, which accounts for nearly a quarter of all internet video traffic. That’s not just a big audience; it’s a goldmine of training data. The European Commission opened an investigation in June 2025 into whether Google is violating antitrust rules by using content from third-party websites and YouTube videos to train its Gemini model without paying for it. If true, that’s not innovation. It’s leveraging dominance to crush the competition before it even starts.

Data Network Effects: The Invisible Moat

Network effects aren’t new. Facebook grew because everyone was on it. But AI network effects are different. They’re not about users connecting to each other; they’re about data feeding models that get smarter, which then attract more users and more data. It’s a closed loop, and only companies with massive datasets can enter it.
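The toy simulation below makes that loop concrete. It does not model any real market. It assumes, purely for illustration, three things:

- Model quality grows as `data ** alpha`.
- Each firm's share of new users, and therefore of new data, is proportional to `quality ** k`.
- Each round, a fixed pool of fresh data is split according to those shares.

The function name `simulate_data_loop` and every parameter value are hypothetical.

```python
def simulate_data_loop(data, alpha, k, new_data_per_round, rounds):
    """Toy data-network-effect loop (hypothetical model, illustrative only).

    data: starting data holdings per firm
    alpha: how strongly data turns into model quality (assumed)
    k: how strongly model quality turns into user share (assumed)
    Returns the final data holdings and each round's user shares.
    """
    data = list(data)
    share_history = []
    for _ in range(rounds):
        # More data -> better model (diminishing returns when alpha < 1).
        quality = [d ** alpha for d in data]
        # Better model -> larger share of users.
        weights = [q ** k for q in quality]
        total = sum(weights)
        shares = [w / total for w in weights]
        # More users -> more data: fresh data is split by user share.
        data = [d + s * new_data_per_round for d, s in zip(data, shares)]
        share_history.append(shares)
    return data, share_history


if __name__ == "__main__":
    # Hypothetical start: the leader holds 60% of the data, the rival 40%.
    final_data, history = simulate_data_loop(
        [60.0, 40.0], alpha=0.5, k=3, new_data_per_round=100.0, rounds=20
    )
    for round_no in (0, 4, 9, 19):
        print(f"round {round_no + 1}: leader user share = {history[round_no][0]:.3f}")
```

In this toy, the assumed exponents carry the whole result. With `alpha * k` above 1, as in the run above, the leader's user share rises every round. Push the product below 1 and the same code lets the rival close the gap. The sketch only illustrates the loop described above; it measures nothing about actual firms.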

Meta’s October 2025 policy change, which blocks AI companies from using tools that let businesses interact with customers on its platforms, is a textbook example. By restricting access to user messages, reviews, and comments, Meta isn’t just protecting privacy. It’s making it harder for rivals to train models that understand real human behavior. Without that data, even the best AI startups can’t build competitive chatbots or customer service tools.

And it’s not just social media. Financial services, healthcare, and logistics firms are feeding their AI systems with proprietary transaction logs, patient records, and supply chain data. These aren’t public datasets. They’re private assets, and the companies that own them are building moats no open-source model can scale over.

Cloud Infrastructure: The New Gatekeepers

Even if you could get the data, you still need the power to train it. And that’s where Amazon, Microsoft, and Google come in. Together, they control 65% of the global cloud market. AWS holds 32%, Azure 22%, and Google Cloud 11%. For most AI developers, there’s no real alternative.
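The shares above are easy to check. They also feed the Herfindahl-Hirschman Index (HHI), the sum of squared market shares that U.S. antitrust agencies commonly use to gauge concentration. The sketch below uses only the three shares quoted here. The article does not break down the remaining 35%, so the result is a partial HHI (a lower bound on the full index), not an official figure. The variable names are illustrative.

```python
# Cloud market shares quoted in the article, in percent.
# The remaining providers (35% combined) are not broken down, so they are omitted.
shares = {"AWS": 32, "Azure": 22, "Google Cloud": 11}

combined_share = sum(shares.values())

# HHI = sum of squared percentage shares. Leaving out the other providers
# can only make this number smaller, so it is a lower bound on the full HHI.
partial_hhi = sum(s ** 2 for s in shares.values())

print(f"Combined share of the top three: {combined_share}%")
print(f"Partial HHI from the top three alone: {partial_hhi}")
```

This prints a combined share of 65%, matching the article's total, and a partial HHI of 1,629.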

Training a 70-billion-parameter model on AWS costs about $1.2 million, according to estimates shared by developers on Reddit. That’s not a barrier; it’s a wall. Independent researchers, small startups, and universities simply can’t compete. And cloud providers aren’t neutral hosts. They’re also building their own AI models (Amazon’s Titan, Microsoft’s Phi, Google’s Gemini) and pushing them through their own platforms. That creates a conflict of interest: why would AWS recommend a rival’s AI model when it can sell its own, faster and cheaper, through its cloud?
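Figures like the $1.2 million above usually come from a back-of-the-envelope calculation. A common rule of thumb puts dense-transformer training compute at roughly 6 × parameters × training tokens floating-point operations. Dividing that by the GPU throughput actually achieved gives GPU-hours, and multiplying by an hourly price gives dollars. The sketch below assumes that rule of thumb. Every input in it is a hypothetical placeholder, not the configuration behind the quoted estimate: the token count, per-GPU throughput, utilization, price per GPU-hour, and the function name `estimate_training_cost`.

```python
def estimate_training_cost(params, tokens, peak_flops_per_gpu, utilization, usd_per_gpu_hour):
    """Rough training-cost estimate (hypothetical inputs, rule-of-thumb compute).

    Uses the common approximation: training FLOPs ~= 6 * params * tokens.
    Returns (gpu_hours, estimated_cost_usd).
    """
    total_flops = 6 * params * tokens
    # Sustained throughput is only a fraction of a GPU's peak.
    effective_flops_per_second = peak_flops_per_gpu * utilization
    gpu_hours = total_flops / effective_flops_per_second / 3600
    return gpu_hours, gpu_hours * usd_per_gpu_hour


if __name__ == "__main__":
    # Illustrative assumptions only: a 70B-parameter model, 1.4 trillion
    # training tokens, ~989 TFLOP/s peak per GPU, 40% utilization,
    # and $3 per GPU-hour of cloud time.
    hours, cost = estimate_training_cost(
        params=70e9,
        tokens=1.4e12,
        peak_flops_per_gpu=989e12,
        utilization=0.40,
        usd_per_gpu_hour=3.00,
    )
    print(f"~{hours:,.0f} GPU-hours, ~${cost:,.0f}")
```

With these placeholder inputs, the estimate lands in the low millions of dollars, the same order of magnitude as the quoted figure. Because cost scales with tokens and inversely with utilization, halving utilization or doubling the token count doubles the bill. That sensitivity is why published estimates vary so widely.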

Worse, many cloud APIs are locked down. A TechCrunch survey of 200 AI executives in September 2025 found that 78% struggled to integrate third-party models into their cloud systems. Why? Proprietary formats, undocumented APIs, and hidden fees. It’s like being forced to buy a specific brand of fuel for your car, even if another one works better.

EU vs. US: Two Opposite Paths

Europe and the U.S. are heading in opposite directions. The EU is moving fast, with strict rules. The AI Act classifies AI systems by risk. It entered into force in August 2024, and its obligations phase in from 2025 onward. High-risk systems, like those used in hiring, policing, or healthcare, must be audited, explainable, and transparent. The Digital Markets Act (DMA) lets regulators designate large platform companies, several of which are also major AI developers, as “gatekeepers.” That designation obliges them to share certain data and allow interoperability.

But there’s a cost. A survey by the European Digital SME Alliance in August 2025 found that medium-sized AI companies spend an average of €285,000 per year just to comply. Many can’t afford it. Some have shut down. Others moved operations outside the EU.

The U.S., meanwhile, took a different turn in July 2025 with America’s AI Action Plan, released by the White House. It explicitly told the FTC to review, and potentially overturn, past FTC investigations and orders that “unduly burden AI innovation.” The goal? Remove barriers. Encourage open-source models. Let the market decide.

But here’s the problem: the market isn’t fair. Microsoft absorbed Inflection AI, and Google absorbed Character.AI. Both deals were structured as technology licenses plus the hiring of key staff, not outright acquisitions, so neither went through standard premerger review under the Hart-Scott-Rodino (HSR) Act. Now, the DOJ is investigating whether these “acquihires” are just ways to kill potential competitors before they grow. The U.S. is waiting for harm to happen. Europe is trying to stop it before it starts.

What’s at Stake for Businesses and Developers

If you’re building an AI product today, you’re caught in the middle. In Europe, you might need to document every training data source, allow third-party audits, and make your model explainable. In the U.S., you might avoid regulation but still face lawsuits if you’re seen as monopolizing data or cloud access.

Open-source models like Mistral 7B and Llama 3 70B are promising, but they still lag behind proprietary models by 15-20% on benchmark tests like MMLU. Why? Because they don’t have the same scale of data. Even if you download the model weights, you don’t get the training data that made the model powerful.

And for enterprise users? Integration is a nightmare. A McKinsey survey in August 2025 found that financial services lead in AI adoption at 78%, but many still use fragmented tools because they can’t connect models across platforms. The dream of a unified AI ecosystem? It’s still out of reach.

The Future: Regulation Can’t Keep Up

The global AI market hit $454 billion in Q3 2025, with generative AI making up nearly half. But the top five tech firms (Microsoft, Google, Meta, Amazon, and Apple) control 63% of all AI patents filed that year. That’s not competition. That’s consolidation.

Experts like Yale’s Fiona Scott Morton say current antitrust laws are “fundamentally ill-equipped” for AI. Traditional tools like market share and price analysis don’t work when the product is free and the currency is data. Former FTC Chair Lina Khan said the agency was building new tools to measure innovation speed and data access as competitive factors.

By 2026, Forrester predicts, 45% of all AI antitrust cases will focus on data access, up from 18% in 2024. Morgan Stanley warns that if regulators force data sharing, AI service margins could drop 15-25% by 2027. That’s not just a business risk; it’s a systemic one.

The question isn’t whether regulation will come. It’s whether it will come too late. If we wait until one company owns every AI model, every dataset, and every cloud, then innovation won’t be stifled; it will be owned.

What makes AI different from other technologies in terms of antitrust?

Unlike past tech, AI thrives on data network effects: the more data a model has, the smarter it gets, which attracts more users and more data. This creates a self-reinforcing cycle where early leaders become nearly impossible to challenge. Traditional antitrust tools like price or market share don’t capture this dynamic, because AI services are often free and competition is about data access, not pricing.

Why are cloud providers a problem for AI competition?

Amazon, Microsoft, and Google control about two-thirds of the cloud market and also build their own AI models. This creates a conflict: they can favor their own models by offering better pricing, faster integration, or exclusive features. Smaller AI developers can’t compete on infrastructure costs, and many can’t even integrate third-party models due to proprietary APIs. This turns cloud providers into gatekeepers who decide who gets to play.

Is open-source AI a solution to monopolies?

Open-source models like Llama 3 and Mistral are important, but they’re not enough. They lack the scale of proprietary models because they don’t have access to the same proprietary datasets. Training a top-tier model still requires billions of data points, most of which are held by Google, Meta, or Microsoft. Without access to that data, even the best open-source code can’t match performance.

What’s the EU’s AI Act doing to change the game?

The EU AI Act treats high-risk AI systems like medical or hiring tools as regulated products. Companies must document their training data, allow audits, and ensure transparency. The Digital Markets Act also forces gatekeepers to share data and allow interoperability. But compliance is expensive (€285,000 a year for medium-sized firms), and many smaller players are being pushed out of the market.

Why are U.S. regulators letting big tech buy AI startups?

Many AI deals, like Microsoft’s with Inflection AI or Google’s with Character.AI, were structured as licensing agreements plus hires of key staff rather than formal acquisitions. As a result, they never triggered mandatory premerger review. Deals that escape that review can only be challenged after the fact, meaning regulators act only after harm is done. Now, the DOJ is investigating whether these deals are “killer acquisitions,” that is, buying potential rivals before they grow. But the system is still too slow for fast-moving AI markets.

What happens if regulators force companies to share their data?

If regulators require data sharing, AI-as-a-service margins could drop by 15-25% by 2027, according to Morgan Stanley. That could reduce profits for big tech but might open the market to new entrants. The trade-off is between innovation incentives and fair competition. No one knows the exact outcome, but the pressure to act is growing.